GroundCite is an open source tool that helps you control which sites Gemini cites, validates those citations, and lets you exclude unreliable sources. This post explains the problems with Gemini’s default citation behavior, how GroundCite fixes them, and invites you to contribute.

Large language models have changed how we interact with information. But when accuracy and accountability matter, one thing becomes critical: relevant, trustworthy citations.

If you’re building tools for journalism, academic research, policy analysis, or enterprise reporting, you know that an LLM without reliable grounding is a liability. A missing or irrelevant source can turn a solid output into a trust-breaking one.

Unfortunately, developers using Google Gemini APIs quickly run into some recurring problems.

The Problems with Gemini’s Current Citation Handling

1. Irrelevant or Broken Citations

Gemini often provides links that don’t contain the referenced text, or worse, links to 404 pages.

2. No Source Preference Control

You can’t tell Gemini to prefer specific sites (e.g., icc-cricket.com for sports scores, fda.gov for drug approvals) unless you hardcode the exact domain in the urlcontext. In that case it looks ONLY at that specific URL, and you lose the generic search feature.

3. No Source Exclusion

You cannot ask Gemini to ignore a specific site or category of sites. Even when you explicitly say “do not use Wikipedia or xyz site or crowdsourced content,” Gemini still cites whichever sources it drew the data from.

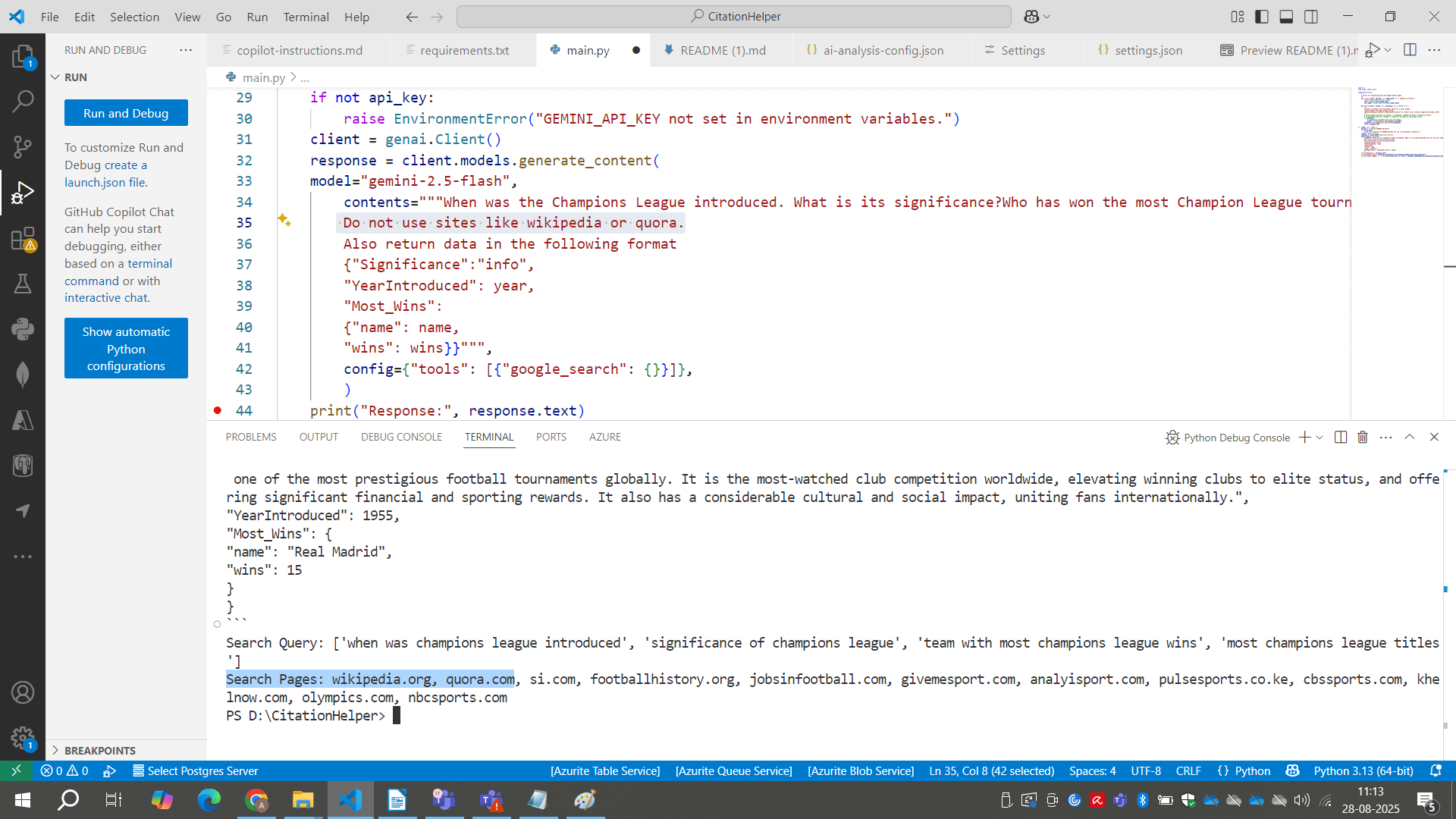

In the example below, notice the instruction given and the sources cited.

Prompt: """When was the Champions League introduced. What is its significance? Who has won the most Champion League tournaments? Do not use sites like wikipedia or quora. Also return data in the following format {"Significance":"info","YearIntroduced": year,"Most_Wins": {"name": name,"wins": wins}}"""

Response: The Champions League, originally known as the European Champion Clubs’ Cup, was first introduced in 1955. The competition was later rebranded and took on its current name, UEFA Champions League, in the 1992-1993 season, incorporating a round-robin group stage. It is widely regarded as the most prestigious club competition in European football and one of the most prestigious football tournaments globally. Real Madrid holds the record for the most Champions League titles, having won the tournament 15 times.

```json
{
  "Significance": "The Champions League is considered the most prestigious club competition in European football and one of the most prestigious football tournaments globally. It is the most-watched club competition worldwide, elevating winning clubs to elite status, and offering significant financial and sporting rewards. It also has a considerable cultural and social impact, uniting fans internationally.",
  "YearIntroduced": 1955,
  "Most_Wins": {
    "name": "Real Madrid",
    "wins": 15
  }
}
```

Search Pages: wikipedia.org, quora.com, si.com, footballhistory.org, jobsinfootball.com, givemesport.com, analyisport.com, pulsesports.co.ke, cbssports.com, khelnow.com, olympics.com, nbcsports.com
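A simple post-check makes the violation above easy to detect in code. The sketch below is only an illustration, not GroundCite’s implementation: `host_of` and `split_sources` are hypothetical helper names, and the input is the list of search pages reported above.

```python
from urllib.parse import urlparse


def host_of(source: str) -> str:
    """Return the lowercase host for a URL or a bare domain such as 'quora.com'."""
    candidate = source if "//" in source else "//" + source
    host = urlparse(candidate).hostname or ""
    return host[4:] if host.startswith("www.") else host


def split_sources(sources, excluded_domains):
    """Partition sources into (allowed, blocked).

    A source is blocked when its host equals an excluded domain
    or is a subdomain of one (e.g. en.wikipedia.org).
    """
    excluded = {domain.lower() for domain in excluded_domains}
    allowed, blocked = [], []
    for source in sources:
        host = host_of(source)
        is_blocked = any(host == d or host.endswith("." + d) for d in excluded)
        (blocked if is_blocked else allowed).append(source)
    return allowed, blocked


# Domains reported under "Search Pages" in the example above.
search_pages = [
    "wikipedia.org", "quora.com", "si.com", "footballhistory.org",
    "jobsinfootball.com", "givemesport.com", "analyisport.com",
    "pulsesports.co.ke", "cbssports.com", "khelnow.com",
    "olympics.com", "nbcsports.com",
]
allowed, blocked = split_sources(search_pages, ["wikipedia.org", "quora.com"])
print("Excluded domains that were still used:", blocked)
```

A check like this only detects the problem after the fact. It does not stop the model from drawing on the excluded sites in the first place.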

4. No Metadata in JSON Structure Outputs

When we ask Gemini to return data in a JSON structure, it does not attach citations directly to the JSON fields. Here’s how it behaves in different cases:

- Using response_schema directly → Gemini produces JSON, but the GroundingMetadata returned from Google is always empty, so there are no citations.

- Embedding the response schema in the instructions → Same result: GroundingMetadata remains empty, so again there are no citations.

- Without a schema, using an inline JSON-like format in the instructions (as in the example above) → Gemini does return GroundingMetadata, but it applies only to the narrative (free-text explanation). The JSON block itself receives no citations.

In the Champions League example above, citations are attached only to the narrative (e.g., “The Champions League, originally known as the European Champion…”), but not to the JSON fields. So the same citations cannot be assigned directly to the JSON values.
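You can verify this behavior yourself by checking whether any cited segment overlaps the JSON part of a response. The sketch below assumes the grounding metadata has been converted to plain dicts with `segment.startIndex`, `segment.endIndex`, and `groundingChunkIndices` fields. That shape, the helper name `supports_touching_json`, and the sample data are assumptions for illustration. Offsets are treated as character positions here, while the real API may report byte offsets.

```python
def supports_touching_json(text, supports):
    """Return the supports whose segment overlaps the JSON span of text.

    The JSON span is approximated as first '{' through last '}'.
    """
    start = text.find("{")
    end = text.rfind("}") + 1
    if start == -1 or end <= start:
        return []  # no JSON-like block found
    touching = []
    for support in supports:
        segment = support["segment"]
        seg_start = segment.get("startIndex", 0)  # may be omitted when zero
        seg_end = segment["endIndex"]
        if seg_start < end and seg_end > start:
            touching.append(support)
    return touching


# Hypothetical response: one cited narrative sentence followed by JSON.
narrative = "Real Madrid holds the record for the most Champions League titles."
text = narrative + '\n{"Most_Wins": {"name": "Real Madrid", "wins": 15}}'
supports = [
    {"segment": {"startIndex": 0, "endIndex": len(narrative)},
     "groundingChunkIndices": [0]},
]
print(supports_touching_json(text, supports))  # prints []
```

An empty result means no citation covers the structured part of the answer, which is the situation described above.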

5. Weak Inline Referencing

Citations often show up at the end as a block of links, with no clear inline mapping to the actual statements in the output. This makes fact-checking slow and painful.
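One way to get inline mapping is to insert numbered markers at the end of each cited segment. The following is a minimal sketch under the same assumptions about the metadata shape (plain dicts with `segment.endIndex`, `groundingChunkIndices`, and `web.uri`, offsets treated as character positions). The function name `add_inline_citations` and the example.com URLs are placeholders, not real sources.

```python
def add_inline_citations(text, supports, chunks):
    """Insert [n] markers after each cited segment and build a reference list."""
    # Work from the end of the text backwards so earlier offsets stay valid.
    ordered = sorted(supports, key=lambda s: s["segment"]["endIndex"], reverse=True)
    for support in ordered:
        end = support["segment"]["endIndex"]
        markers = "".join(f"[{i + 1}]" for i in support["groundingChunkIndices"])
        text = text[:end] + markers + text[end:]
    references = [f"[{n}] {chunk['web']['uri']}" for n, chunk in enumerate(chunks, start=1)]
    return text, references


first = "It was first introduced in 1955."
text = first + " Real Madrid holds the record."
supports = [
    {"segment": {"startIndex": 0, "endIndex": len(first)},
     "groundingChunkIndices": [0]},
    {"segment": {"startIndex": len(first) + 1, "endIndex": len(text)},
     "groundingChunkIndices": [0, 1]},
]
chunks = [
    {"web": {"uri": "https://example.com/source-a"}},  # placeholder URL
    {"web": {"uri": "https://example.com/source-b"}},  # placeholder URL
]
cited_text, references = add_inline_citations(text, supports, chunks)
print(cited_text)
# It was first introduced in 1955.[1] Real Madrid holds the record.[1][2]
print("\n".join(references))
```

Each statement then carries its own source numbers, so a reviewer can check a single claim without scanning the whole link block.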

What “GroundCite For Gemini” will enable

We’re building an open source project that acts as a smarter, configurable grounding layer for Google Gemini APIs.

- Allow configurable source preferences: make the model prefer categories and sites like .gov, .edu, or official organizational domains.

- Support configurable exclusions: permanently blocklist unreliable or unwanted domains/sites.

- Validate citations: ensure the cited page is live, accessible, and contains the relevant content. You can configure removal of chunks with invalid citations.

- Validate relevance: check that the result returned actually answers the query and is not just the “next best” match.

- Add inline numbered citations: every fact in the output maps back to a verifiable source.

- Enable JSON outputs with citations: enrich the text first, then parse it into structured form.

- Configurable strategy: you control which models to use for JSON parsing, how strict site inclusion/exclusion should be, and the confidence levels required for citations.
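To make the citation validation idea concrete, here is a rough sketch of a liveness and content check. It is not GroundCite’s actual code. The names `CitationCheck`, `fetch_text`, `key_terms`, and `validate_citation` are hypothetical, the term-overlap score is a deliberately naive stand-in for a real relevance check, and `min_overlap` plays the role of a configurable confidence level. The fetcher is injectable so the example runs without network access.

```python
import http.client
import re
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CitationCheck:
    url: str
    reachable: bool
    term_overlap: float
    valid: bool


def fetch_text(url: str, timeout: float = 10.0) -> Optional[str]:
    """Return the page body as text, or None if the page cannot be fetched."""
    try:
        request = urllib.request.Request(url, headers={"User-Agent": "citation-check-sketch"})
        with urllib.request.urlopen(request, timeout=timeout) as response:
            if response.status != 200:
                return None
            return response.read().decode("utf-8", errors="replace")
    except (OSError, ValueError, http.client.HTTPException):
        return None  # dead link, 404, timeout, or malformed URL


def key_terms(claim: str) -> set:
    """Keep numbers and words longer than three characters."""
    words = re.findall(r"[a-z0-9]+", claim.lower())
    return {w for w in words if len(w) > 3 or w.isdigit()}


def validate_citation(claim: str, url: str, min_overlap: float = 0.6,
                      fetch: Callable[[str], Optional[str]] = fetch_text) -> CitationCheck:
    """Check that the page is live and mentions enough of the claim's key terms."""
    page = fetch(url)
    if page is None:
        return CitationCheck(url, False, 0.0, False)
    terms = key_terms(claim)
    page_lower = page.lower()
    found = sum(1 for term in terms if term in page_lower)
    overlap = found / len(terms) if terms else 0.0
    return CitationCheck(url, True, overlap, overlap >= min_overlap)


# Offline demo with placeholder pages instead of real HTTP requests.
pages = {
    "https://example.com/good": "Real Madrid are the most successful club in "
                                "Champions League history with 15 titles.",
    "https://example.com/off-topic": "A page about cooking recipes.",
}
fake_fetch = pages.get  # returns None for unknown URLs, like a dead link

claim = "Real Madrid has won the Champions League 15 times"
for url in ["https://example.com/good", "https://example.com/off-topic",
            "https://example.com/missing"]:
    print(validate_citation(claim, url, fetch=fake_fetch))
```

Raising or lowering `min_overlap` trades recall for strictness. A production check would likely use something stronger than substring matching, such as a model-based relevance judgment.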

Why This Matters

Projects where citations are mission-critical — think compliance reports, academic references, medical content, or even live sports coverage — need a higher bar for accuracy.

Right now, Gemini offers speed and scale but not enough control. Our project bridges that gap, making it safer to build apps where truth and trust can’t be optional.

GroundCite FAQs

Discover GroundCite’s capabilities: Check out our FAQs for detailed insights

https://www.cennest.com/groundcite-frequently-asked-questions-faq/

Call for Collaboration

This is an open project. We’re looking for developers, researchers, and anyone frustrated by current LLM citation handling to join the discussion.

We want to hear:

- What citation issues you’ve faced with Gemini or other LLMs.

- What controls you’d like over source inclusion/exclusion.

- How inline citations should be structured for your workflows.

If citations matter to your work, we’d love your input. Together, we can make the search results not just smart — but trustworthy.

Please reach out to anshulee@cennest.com. We would love to hear your inputs, experiences, and asks.

GitHub repo: https://github.com/cennest/ground-cite

Play with the live demo at https://groundcite.cennest.com/

Team Cennest